...and it's only appropriate that it is about the fact that I've been hired by Autodesk.

Yes, you've seen Ken spill the beans over on CGTalk. It is true. Autodesk has hired me to handle rendering and funky stuff like that. Pixels. Whatnot. Friday Sept 30:th is my last day at mental images/NVidia (which I end by doing the meet-and-greet-3d event in Berlin, see previous post), and Monday October 3:rd is my first day at Autodesk... this time around (I used to work for Autodesk in the past, doing mechanical design software).

This all started with some discussion between my good friends Neil Hazzard, Shane Griffith and myself back at EUE (good friends who will now be my actual colleagues). It was thrown out as an idea, we pondered it, thought it made sense, and I talked to my managers at mental images/NVidia, and everybody thought it made a lot of sense.

Integration of mental ray and iray into Autodesk products has always been... problematic. Integration work was done by Autodesk, but there was never a dedicated person with enough precise know-how of the nitty-gritty details to handle it (or sometimes simply not enough priority put on it). Well...... now I will be working there. 'nuff said :)

I think this will be interesting. I've been "Shader Wizard" for mental images (now "NVidia ARC" - "Advanced Rendering Center") for 7+ years now, so perhaps it's time to move forward to New Challenges. Today's physical rendering doesn't really require "shaders" in the same way it did in the past anyway..... 'tis all BSDFs and physics and shiny stuff :)

It's not like I'm moving far anyway. I will probably be in all the same meetings, just on the other "side"....

I think this will be really interesting, and the future is Bright (about 95000 cd/m^2 to be exact). Let's go there together.

With Sunglasses.

Also, as I type this I am finalizing some Really Cool Sh%t. Stay tuned - as always :)

/Z

zap's repository of mental ray tips and tricks, frequent questions and their answers, and some smoke and mirror mental ray trickery you may not find elsewhere...


2011-09-28

This is the 100:th post...

Labels:

appearances,

Autodesk,

Max,

mental images,

mental ray,

new job,

NVIDIA

2011-02-04

The Great Directory Migration - putting stuff in 3ds max 2011 (and newer)

I apologize that this blog post is way overdue.

What's this about?

All over the net (including this blog) you can find instructions on how to add various shaders to 3ds Max, by putting files in certain directories in the 3ds Max directory structure, namely a "mentalray" directory (with various subdirectories) under your main 3ds Max directory.

Nowadays, there are two things to watch out for:

GOTCHA #1: 64/32 Bitness

First is 32 vs 64 bit. In Windows, programs on a 32 bit machine live under a directory that (on an English-language computer) is called "C:\Program Files\"

This is also true for 64 bit programs on a 64 bit computer.

However, for 32 bit programs on a 64 bit computer the directory is called "C:\Program Files (x86)\" which may throw you off.
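The three cases above fit in a tiny lookup. This is only a sketch for an English-language Windows install with default locations; the function name `program_files_dir` is made up for illustration.

```python
def program_files_dir(program_bits: int, windows_bits: int) -> str:
    """Return the default install root on an English-language Windows system.

    Hypothetical helper: it only encodes the three cases described above.
    """
    if program_bits not in (32, 64) or windows_bits not in (32, 64):
        raise ValueError("bitness must be 32 or 64")
    if program_bits > windows_bits:
        raise ValueError("a 64 bit program cannot run on 32 bit Windows")
    # Only 32 bit programs on 64 bit Windows get the "(x86)" directory.
    if program_bits == 32 and windows_bits == 64:
        return r"C:\Program Files (x86)"
    return r"C:\Program Files"


print(program_files_dir(32, 64))
```

So if you run 32 bit 3ds Max on a 64 bit machine, start looking under the "(x86)" directory.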

GOTCHA #2: 3ds Max 2011 (and newer)

When Autodesk introduced the MetaSL framework in 3ds Max 2011 (which means that shaders are not necessarily mental ray shaders per se), having a subdirectory of your max directory called "mentalray" doesn't really make sense any more. But since both of these are mental images technologies, having a directory called "mentalimages" does make sense.

The change was made such that instead of a single "mentalray" directory under which the three categories of shaders (standard, autoload and 3rdparty) live, there is now a "mentalimages" directory. The three categories exist under this directory, similar to before.

However, under those three directories, the distinction between "mentalray" and "MetaSL" shaders has been added.

Under those you find various other subdirectories - which under the "mentalray" subdirectory hence includes the good old friends the "include" and "shaders" directories.


Example:

To have an .mi file (a mental ray "include" file) such as skinplus.mi loaded automatically on startup, you want it in the "include" directory under the "autoload" category.

In 3ds Max 2010 (or older) this would have been:

- C:\Program Files\Autodesk\3ds Max 2010\mentalray\shaders_autoload\include

In 3ds Max 2011 (and newer) this would instead be

- C:\Program Files\Autodesk\3ds Max 2011\mentalimages\shaders_autoload\mentalray\include

Basically, the translation would be that

- C:\Program Files\Autodesk\3ds Max 2010\mentalray\shaders_<category>\<dirname>

becomes

- C:\Program Files\Autodesk\3ds Max 2011\mentalimages\shaders_<category>\mentalray\<dirname>
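If you prefer to see the translation rule as code, here is a minimal sketch. The helper name `shader_dir` and its parameters are my own invention for illustration; the directory names are the ones from the examples above, and the install root is assumed to be the default one.

```python
from pathlib import PureWindowsPath


def shader_dir(max_root: str, category: str, dirname: str, new_layout: bool) -> str:
    """Build the shader directory for the old (2010 and older) or new (2011+) layout.

    Hypothetical helper: max_root is the 3ds Max install directory,
    category is e.g. "autoload", dirname is e.g. "include" or "shaders".
    """
    root = PureWindowsPath(max_root)
    if new_layout:
        # 2011 and newer: mentalimages\shaders_<category>\mentalray\<dirname>
        path = root / "mentalimages" / f"shaders_{category}" / "mentalray" / dirname
    else:
        # 2010 and older: mentalray\shaders_<category>\<dirname>
        path = root / "mentalray" / f"shaders_{category}" / dirname
    return str(path)


print(shader_dir(r"C:\Program Files\Autodesk\3ds Max 2010", "autoload", "include", False))
print(shader_dir(r"C:\Program Files\Autodesk\3ds Max 2011", "autoload", "include", True))
```

`PureWindowsPath` is used so the sketch produces backslash paths even if you run it on a non-Windows machine.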

I hope this helps installing various shaders and other goodies in 3ds Max 2011 and beyond.

/Z

Labels:

3ds Max 2010,

3ds Max 2011,

Max,

mental ray,

shaders

2009-05-15

3ds Max 2010, MetaSL and mental mill

Wouldn't it be fun if....

While working in the viewport wouldn't it be nice if the thing you rendered was faithfully represented in the viewport? At least as faithfully as technically possible?

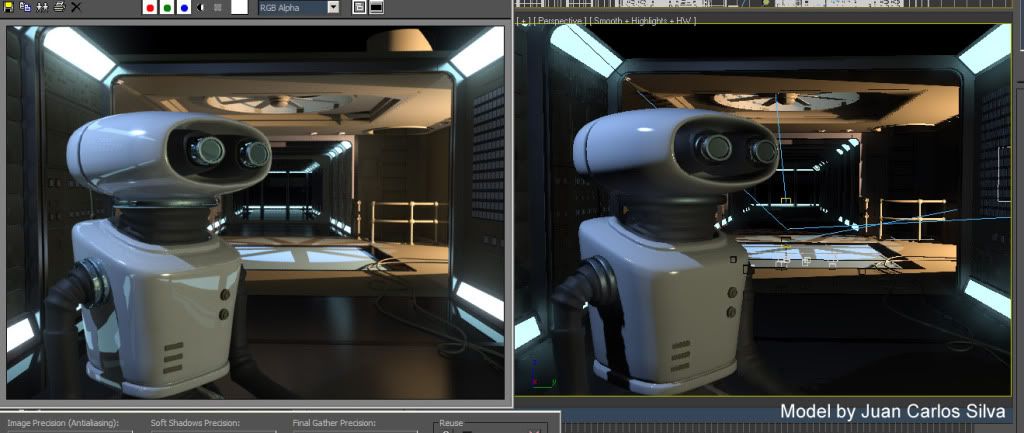

Wouldn't it be neat if the left part of the below was the final render and the right part would be what you saw in the viewport, while working with it?


Oh wait - that is exactly how it looks in 3ds Max 2010! Oh no, how can this be? Is it magic? Is it elves? No, it's MetaSL, and mental images mental mill technology.

What happens in 3ds Max 2010 is that several of the shaders have been given an implementation in the MetaSL shading language. MetaSL is mental images' renderer-agnostic shading language. When this shading language is taken through the mental mill compiler, out the other end drops something that can fit multiple different graphics hardware, as well as several different renderers!

This way, no matter if the graphics hardware is NVidia or ATI, you will see the same thing (or as close as the card can afford to render) using only a single MetaSL source shader.

Viewport accuracy

Look at this image, which is the difference between the render (on the left) and the viewport in the previous version, 3ds Max 2009:


Notice how horrible the viewport (on the right) looks, how harshly lit, unrealistic, and oversaturated it looks? The thing to take home from this is that the main reason that this looks massively different is that the image on the right is neither gamma corrected, nor tone mapped.

I mention this to illustrate the importance, nay, imperativeness of using a proper linear workflow, with a gamma corrected and tone-mapped image pipeline.

So while the lighting and shading itself is much more accurate in 2010 than 2009, the key feature that really causes the similarity between the render and the viewport is the tone mapping and gamma correction.
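To make "tone mapped and gamma corrected" a bit more concrete, here is a minimal sketch of what happens to a single linear pixel value on its way to the screen. Note the assumptions: the simple Reinhard curve `x / (1 + x)` is a stand-in I picked for illustration (it is not the exposure control 3ds Max actually uses), and the display gamma of 2.2 is a common default, not something read from the application.

```python
def tone_map_reinhard(linear: float) -> float:
    """Compress an unbounded linear value into the 0..1 range (stand-in operator)."""
    return linear / (1.0 + linear)


def gamma_encode(value: float, gamma: float = 2.2) -> float:
    """Encode a linear 0..1 value for a display with the given gamma."""
    return max(value, 0.0) ** (1.0 / gamma)


def display_value(linear: float) -> float:
    """Tone map first, then gamma correct, as in a linear workflow."""
    return gamma_encode(tone_map_reinhard(linear))


for linear in (0.05, 0.5, 1.0, 4.0):
    # Naive display: clamp the raw linear value, no tone mapping, no gamma.
    naive = min(linear, 1.0)
    print(f"linear={linear:5.2f}  naive={naive:.3f}  corrected={display_value(linear):.3f}")
```

The naive column is roughly the "harsh" look: bright values simply clip and dark values stay too dark, while the corrected column keeps detail at both ends.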

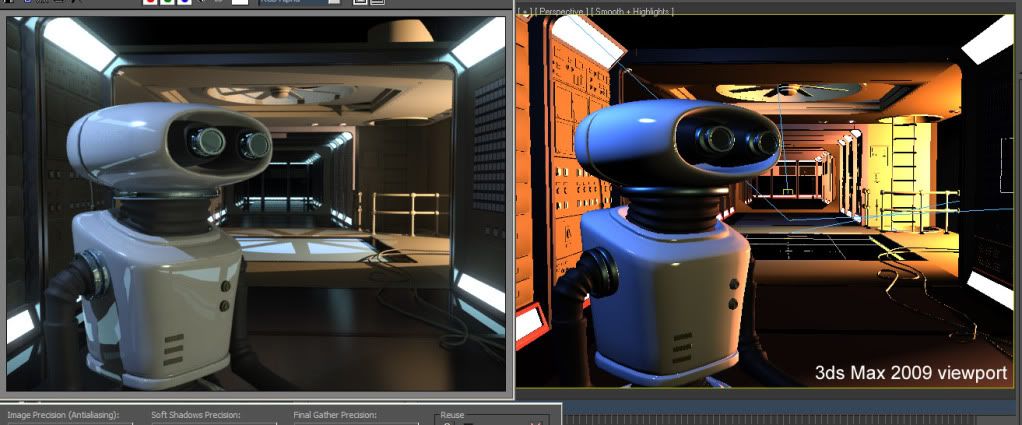

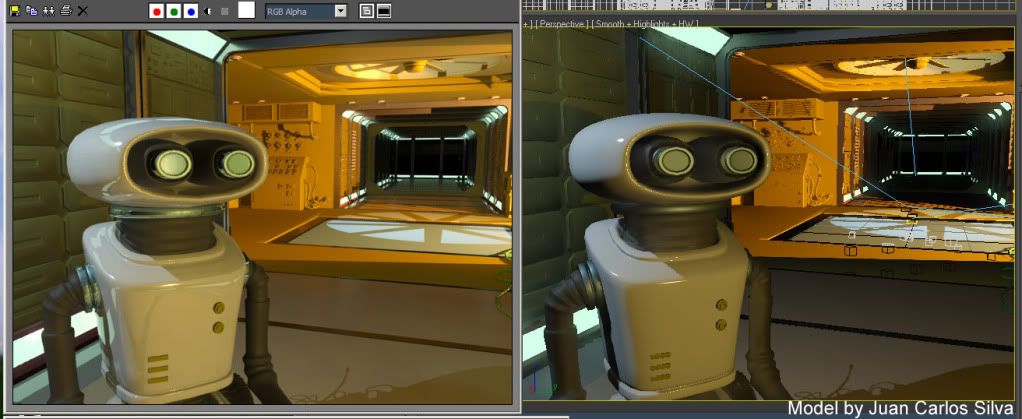

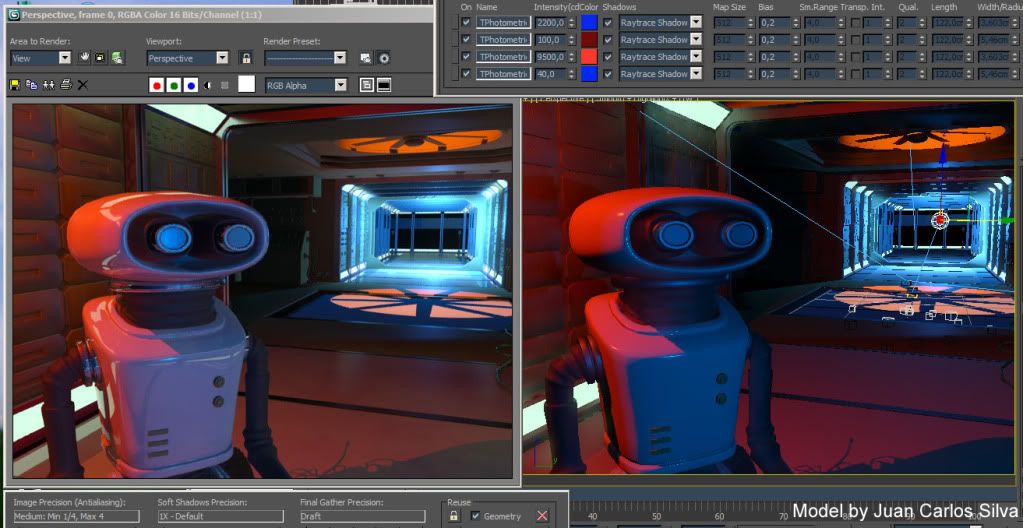

But naturally, the additional accuracy of the shading and lighting drives this home even more: See below a couple of different lighting scenarios, and see how well they match the render (again, render on the left, viewport on the right):


After playing around a bit (tip: use the 3ds Max "light lister"), you can get:


So, using this feature, with the help of the MetaSL technology, you can make a decent set of lighting decisions and "look development" in realtime in the viewport. That's pretty neat, if I may say so myself ;)

So what about mental mill

Now, none of the above involves the end user using mental mill. It is still using the mental mill compiler "under the hood"; MetaSL versions of 3ds Max shaders are compiled for the hardware, and used in the viewport.

But in 3ds Max 2010, the end user can also use MetaSL and mental mill directly. And yes, not only for hardware rendering in the viewport, but for mental ray rendering as well!!

First, please note that this is a 1st step integration of mental mill into 3ds Max. It has some issues (we know some quite well), and even a couple of bugs snuck into the final release (we are also very aware of these). But the general workflow is that you:

- Create a shade tree inside the mental mill Artist Edition that ships with 3ds Max

- Save this as an .xmsl file

- Insert a "DirectX material" (yes, this makes you think this is only for HW rendering, since in the past "DirectX material" was only used for such things)

- Load the .xmsl file into it

- See the material in the viewport and render it with mental ray!

Now, as I mentioned above, there are some known issues in this 1st integration, to be aware of:

- In mental mill you always have to build a Phenomenon, not just a free standing shade tree. So your workspace in the mill should contain a single Phenomenon representing your new material.

- The last node must have a single output. This is actually a bug, and it will be fixed, but for now, if your root node has many outputs (like for example the Illumination_Phong does), just pipe its main output through some other shader (like Color_Brightness or similar) to make sure the final output node only has a single output.

- Due to a difference in texture coordinate handling in 3ds Max mental ray and MetaSL, UV coordinates must be connected explicitly. So if you include, say, a texture lookup node, you must include a "State_uv_coordinate" node to feed it coordinates. Inside mental mill you will really not see any difference, since the built in "default UV's" work there, but without doing this mental ray will render it incorrectly.

- An issue with localization was recently discovered; it seems that if your Windows system is set to use "," rather than "." for the decimal separator, this causes an error in interpreting some MetaSL code. For now, the workaround is to change your Windows decimal separator setting to "."; sorry for the inconvenience :(

- While you can change the shader in the mill and re-load it into the DirectX material and see the viewport update, the mental ray loaded version of the shader will not update automatically; be careful about this. You can force an update by renaming the Phenomenon and the file so mental ray loads it as a "new" shader.
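For the curious, the decimal separator issue in the list above belongs to a very common class of bug. The sketch below is not the actual 3ds Max or mental mill code (I am not showing that here); it only illustrates, with made-up function names, how a number written with a "," separator trips up a parser that expects ".".

```python
def format_number(value: float, decimal_separator: str) -> str:
    """Write a number the way a system with the given decimal separator might."""
    return f"{value:.2f}".replace(".", decimal_separator)


def parse_strict(text: str) -> float:
    """Parse a number the way a parser expecting '.' as separator would."""
    return float(text)


for separator in (".", ","):
    text = format_number(0.5, separator)
    try:
        print(f"{text!r} parses to {parse_strict(text)}")
    except ValueError:
        print(f"{text!r} cannot be parsed")
```

Switching the Windows setting to "." simply makes sure both sides agree on the format.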

Having taken the above things into account, though, your MetaSL shaders should render pretty much exactly the same in mental ray as they appear in the viewport!

Some really snazzy things can be rendered thusly.

mental mill Standard Edition

The "Artist Edition" of mental mill shipping with 3ds Max can only work with the shipping nodes, not custom MetaSL nodes. It can also only export hardware shaders. (While this may sound like a contradiction to what I said above, note that you are not exporting the shader from mental mill in the 3ds Max 2010 workflow, you are saving the mental mill project (.xmsl) itself, and it is 3ds Max that is able to load this and render it in mental ray.)

If, however, you are a shader developer who wants to write custom MetaSL shaders and render these in mental ray as well as see them in the viewport, you need the mental mill "Standard Edition". This product can be purchased over the newly snazzily updated www.mentalimages.com website.

Enjoy the fun!

/Z

Labels:

3ds Max 2010,

Max,

mental mill,

mental ray MetaSL,

shaders

2008-08-01

mrMaterials.com & The Floze Tutorials

mrMaterials.com

Last week, the site mrmaterials.com opened officially to the public, so you can actually up- and download materials there as well as (like I mentioned in my last post) mymentalray.com. So don't be shy, up & download those nice mental ray materials as much as you like!

The Floze Tutorials

Florian Wild, most known as "Floze" online, has put together an outstanding set of tutorials for mental ray about rendering various types of environments. These are, in order:

Sunny Afternoon

Florian has undoubtedly done a great job on these, and they are very good reading, and even though they are written for Maya, mental ray is mental ray, so the basic techniques can be transported to XSI, 3ds Max etc. just as well.

Luckily, Floze has already done the thinking for you here as well, and this is available in eBook format from www.3dTotal.com for £8.55 (which I think he is well worth).

Enjoy

Stuff

Siggraph is closing in. I plan to do a bit of "reporting" from it on twitter, (so follow me there), and I may even, if I get extra crazy, do some QiK coverage. We'll see....

...until next time - keep tracing. ;)

/Z

Labels:

appearances,

blog,

conferences,

Floze,

glossy reflections,

Jeff Patton,

material libraries,

Max,

Maya,

mental ray,

mia_material,

XSI

2008-06-27

Layer all your Love on Me, MyMentalRay, Material Libraries and.... Stuff

MyMentalRay.com

First, before I start today's post, I advise everyone to run over to the freshly updated mymentalray.com which has a brand new fresh coat of paint, an updated material library, dynamic content, and a lot of other spiffy stuff.

Take special note of the start of the mental ray material library over there. It's not the only effort of its kind. My pal Jeff Patton is involved with a second very similar effort known as mrmaterials.com.

Simple Layering

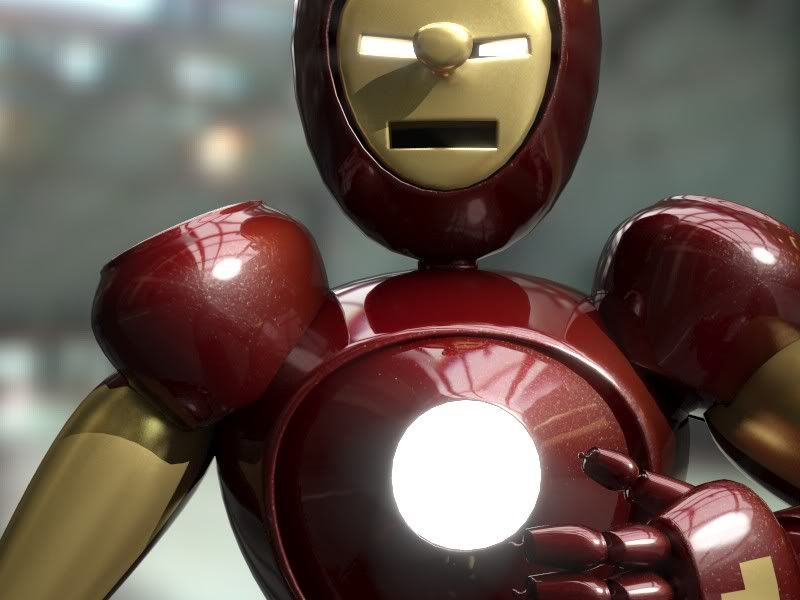

I keep getting questions about layering (probably sparked by the recent writings about Iron Man) where you want to use something like Arch&Design (mia_material) to give both a broad specular glossy highlight, and a 2nd layer of a much more shiny "clearcoat" style material.

Well, nothing is stopping you from doing exactly that. I made this Iron Man material (apologies in advance to Ben Snow, and to all you guys for my lack of modelling sKiLz :) )

This isn't high art, but is there to demonstrate the concept. In most applications like Maya and XSI, you can easily blend materials using various blending nodes. But I did this in 3ds Max, and it's a little bit more difficult in a sense. I used the 3ds Max Blend material for this.

The trick is to understand that the 3ds Max Blend doesn't just *add* two materials (that's what the Shellac does), but it interpolates between them. This is generally better, because you do not break any energy conservation laws! (Also it works with photons that way!). Shellac, while nice, makes it really easy to make nonphysical materials.
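Here is the difference boiled down to one number per material. This is a deliberately simplified scalar sketch, not how 3ds Max implements either material: `blend` interpolates as described above, `add_layers` adds like Shellac, and the reflectance values are made up.

```python
def blend(base: float, coat: float, mask: float) -> float:
    """Interpolate between two materials; mask=0 gives base, mask=1 gives coat."""
    return base * (1.0 - mask) + coat * mask


def add_layers(base: float, coat: float, amount: float = 1.0) -> float:
    """Add the coat on top of the base (Shellac-style), scaled by amount."""
    return base + coat * amount


base_reflectance = 0.8   # hypothetical glossy base
coat_reflectance = 1.0   # hypothetical mirror-like clearcoat

for mask in (0.0, 0.25, 0.5, 1.0):
    print(f"mask={mask:.2f}  blend={blend(base_reflectance, coat_reflectance, mask):.2f}"
          f"  add={add_layers(base_reflectance, coat_reflectance, mask):.2f}")
```

The blended result never leaves the range spanned by the two inputs, while the added result climbs past 1.0, i.e. the surface would reflect more light than it receives.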

However, since you are working with Blend you will have to take this into account. Here is an example:

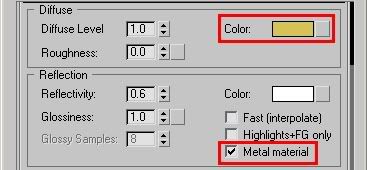

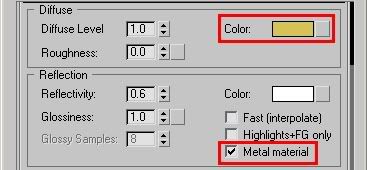

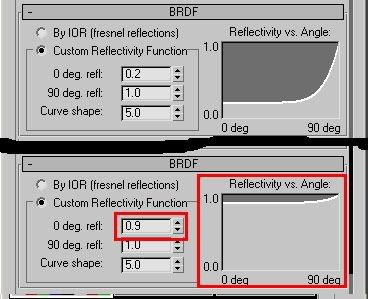

From left to right:

- Left: A completely mirroring Arch&Design material

- Center: A glossy Arch&Design material in "Metal" mode

- Right: a Blend material using the above two and a Falloff in Fresnel mode as the blend map.
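To show how an angle-dependent blend map drives the third material, here is a small sketch. Assumptions are stated loudly: I use Schlick's approximation as a stand-in for the Fresnel curve (the Falloff map has its own implementation, which I am not reproducing), `f0=0.04` is a typical facing-angle reflectance for a dielectric, and the colors are invented.

```python
def schlick_fresnel(cos_theta: float, f0: float = 0.04) -> float:
    """Schlick's approximation: reflectance at a given view angle cosine."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def fresnel_blend(base_rgb, coat_rgb, cos_theta: float, f0: float = 0.04):
    """Blend base and coat colors using the Fresnel term as the blend mask."""
    mask = schlick_fresnel(cos_theta, f0)
    return tuple(b * (1.0 - mask) + c * mask for b, c in zip(base_rgb, coat_rgb))


base = (0.7, 0.1, 0.1)   # hypothetical colored glossy "Metal" mode reflection
coat = (1.0, 1.0, 1.0)   # hypothetical uncolored mirror reflection

for cos_theta in (1.0, 0.5, 0.1, 0.0):
    rgb = fresnel_blend(base, coat, cos_theta)
    print(cos_theta, tuple(round(channel, 3) for channel in rgb))
```

Facing the camera the base dominates, and towards grazing angles the white clearcoat reflection takes over.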

A couple of things to note about this:

- The "common mistake" people make is to create one shiny material with a falloff for the clearcoat and a completely reflective (glossy) material for the "base", and then try to blend these two with Shellac. This very easily causes nonphysical results on edges. Instead, doing a Blend between 100% reflective and the "base surface" makes sure to keep the energy in check.

- Note that for metallic colored objects, their color does affect the color of reflection, but for dielectric objects the reflection is always white. You do this by having the "Metal" mode turned on for the base object (so reflection color is taken from diffuse color) but OFF for the clearcoat (which is a dielectric). This way you get the proper "uncolored" sharp reflections on top of "colored" glossy reflections.

In this particular "Iron Man" material, I also utilized pieces of the car paint shader to get the "flakes" (although the movie version didn't really have any) in the paint. For that I only utilized a single component of car paint (flakes) and had pretty much everything else coming from Arch&Design. For this reason I did use Shellac to layer the car paint with the Arch&Design.

More advanced Layering

This "Blend" technique can be used in multiple layers, witness this (again apologies for my modelling skills :) )

This image originated from a question I got about making "greasy brass", so I made this material (also available on mymentalray.com) which doesn't just blend two things together, but several. Without going too deep into the technique, I am blending between the metal layer, and two different "grease" layers, here with different bump maps.

The trick to keep straight in your head is to make each "layer" look as if it was only made of the given sub-material, and let the blending between the layers handle the weighting. Unless you "know what you are doing", avoid blends where the weights sum up to more than 100%. This is automatic in 3ds Max when using "Blend", but in Maya or XSI - or in 3ds Max when using "Shellac" - it's easy to run amok with the levels.
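Why is this automatic with nested Blends? A quick sketch of the arithmetic, assuming each Blend simply interpolates between "everything so far" and the next layer (the function name and mask values are made up):

```python
def nested_blend_weights(masks):
    """Effective weight of the base and each layer after stacking Blend materials.

    masks[i] is the blend amount of layer i+1 over everything below it.
    Returns [base, layer1, ..., layerN].
    """
    weights = [1.0]
    for mask in masks:
        # Everything below is scaled down to make room for the new layer.
        weights = [w * (1.0 - mask) for w in weights] + [mask]
    return weights


weights = nested_blend_weights([0.3, 0.5])   # metal, grease 1, grease 2
print(weights, "sum =", sum(weights))
```

Each new layer takes its share by scaling down what is underneath, so the total stays at 100% no matter how many layers you stack. With additive layering the same amounts would total 1.0 + 0.3 + 0.5 = 180%.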

As always with metal, having something interesting to reflect (i.e. some HDRI environment or similar) is important. But I covered that in an earlier episode (see also here about glossy materials).

...and Other Stuff(tm)

In other news:

- By popular demand I updated the new skinplus.mi file mentioned in a previous post so it now has a special version that does displacement. See the (updated) post.

- For those who have missed it, go over to FXGuide.com where there is lots of cool coverage of the vfx business. Also, if you aren't subscribing to CineFex, well, then you should.

Thanks for listening. As always, following me on Twitter is a great idea, especially as SIGGRAPH draws closer. I'll be Tweeting my location regularly while in L.A.

/Z

Labels:

glossy reflections,

Jeff Patton,

material libraries,

Max,

Maya,

mental ray,

mia_material

2008-05-08

SIGGRAPH 2008 talks

Phew. I just finalized the course notes for my SIGGRAPH talks.

As mentioned before, I will be holding an Autodesk masterclass with the title of "Miracles and Magic: mental ray technology in Photo-real Rendering for Production". This will - by popular demand - be a lot about gamma and linear workflow (I actually clocked the pure presentation "talky" part on this segment alone at 30 minutes, sans practical demo bit!).

Beyond the LWF/Gamma stuff, it'll talk about CG/Live Action integration, and tips for rendering flicker-free animations, and some other stuff (assuming I can get through it all in time ;) ).

Unless things change, the masterclass itself is from 1:30PM to 3:00PM on Thursday, August 14:th. There is also a "QA with all the masterclass presenters" on Wednesday, August 13:th at 9:00AM that I will also participate in. The full agenda and more info is here.

Furthermore, I will also be co-teaching a SIGGRAPH course entitled "HDRI for Artists" together with Kirt Witte (Savannah College), Hilmar Koch (ILM), Gary Davis (Autodesk) and Christian Bloch (HDRLabs).

The class is set for Monday, 8:30-12:15 in room 502A.

To make it easier to track these things, I've added a twitter feed, plus I gave this place a new, short, easy-to-remember URL:

www.mentalraytips.com

Enjoy.

/Z


Labels:

appearances,

gamma,

linear workflow,

Max,

Maya,

production library,

siggraph

2008-04-24

Beauty isn't only Skin Deep: combining fast SSS with mia_material (A&D)

UPDATE 2008-06-27: The file is now updated to also support displacement in a new 'SSS Fast Skin+ (w. Disp)' material

UPDATE 2011-02-04: This is now updated with correct paths for 2011 versions

The image on the right is by Jonas Thörnqvist, an incredibly talented 3d Artist, and it is created using the mental ray skin shader.

Seeing some recent work from Jonas reminded me that I made some promises back in the day in this blog about tips on how to combine the "fast SSS skin" shader with the nice glossy reflective capabilities of the mia_material (i.e. "Arch&Design" in 3ds Max speak).

The trick w. the skin shader is that it uses light mapping. So the "material" that uses it must connect both a "surface shader" (the one which creates the actual shading) and a "lightmapping shader" (which is what pre-bakes the irradiance to scatter).

This is a tad tricky to do manually, and for this reason the skin shader is supplied as what is called in mental ray parlance a "material phenomenon". Well, suffice to say... it does all the magic for you! You don't need to think.

However, in some applications (notably Maya) this is different, and the skin shader comes shipped as a separate light mapping node and shader node, and there are scripts set up to combine them. Similarly for XSI, there exists a "split" solution already.

But the poor Max users are left behind. Like tears in the rain.

This is because a "material phenomenon" can't easily be combined with other things... coz it's a "whole complete package". And due to the peculiar requirements of the skin shader, it will not work if it is a "child" of some other material (like a Blend material in 3ds Max, or similar). So it's a wee bit hard to do from the UI.

However, what is not hard to do, is to actually write a different Phenomenon! As a matter of fact it is so simple, that I thought people would hop about doing exactly that left, right and center. And indeed, Jonas, mentioned above, did exactly that. Which is why his renders are so cool.

It so happens I've had a modified version of the skin phenomenon cluttering my harddisk for some time now... I just haven't gotten around to posting it before.

So, without further ado, here it is. It's experimental. It's unofficial. It's unsupported. If it makes your computer explode, so be it. Don't say I didn't warn you. Making no promises it even works. Etc.

Take the file skinplus.mi and save in your 3ds Max mental ray shader include autoload directory, i.e. it's generally something like:

For 3ds Max versions 2010 or earlier:

- c:\Program Files\Autodesk\3ds Max ????\mentalray\shaders_autoload\include\

For 3ds Max 2011 or newer:

- c:\Program Files\Autodesk\3ds Max ????\mentalimages\shaders_autoload\mentalray\include\

(Image caption: Jonas "Incredible Hulk")

Labels:

Max,

mental ray,

mia_material,

skin shader,

sss

2008-04-18

3ds Max 2009 released: mr Proxies and Other Fun Stuff

You may not have noticed, but a new release of 3ds Max has seen the light of day, known as "3ds Max 2009" (+/- a "Design" suffix).

"What, already?" I hear you ask. Yeah - indeed. This was a shortened development cycle to realign the release date of 3ds Max with other Autodesk products. Disregarding the marketing talk, it means that stuff had to happen in six months that normally takes a year. And being heavily involved in this release, I can say that I am still sweating from the workload....

For mental ray nuts such as yourself, there's some fun new stuff in 2009. Perhaps most notable is the hotly requested "mental ray proxies".

What is a "mental ray proxy", you ask?

Well, technically, it's a render-time demand-loaded piece of geometry. The particular implementation chosen for 3ds Max is in the form of a binary proxy. This means that the mental ray render geometry data is simply dumped to disk as a blob of bytes together with a bounding box. These bytes can then be read in... but not until a ray actually touches the bounding box!

Normally, geometry lives in the 3ds Max scene, and is then translated to mental ray data. So it means the object effectively lives twice in memory, once in 3ds Max, and once as the mental ray "counterpart". Not only do the proxies remove the translation time, they actually remove the need for the object to exist in all its glory in 3ds Max; there is only a lightweight representation of the object in the scene, that can be displayed as a sparse point cloud so you can "sort of see what it is", and work the scene at interactive rates. Not until the object is actually needed for render is it even loaded into memory, and when it is no longer needed, it can be unloaded again to make room for other data.
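The demand-loading idea can be sketched in a few lines. To be clear, this is a toy model and not how mental ray is implemented: the class `GeometryProxy`, the `loader` callback and the slab-style box test are all my own illustration of "don't read the bytes until a ray touches the bounding box".

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def ray_hits_box(origin: Vec3, direction: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: does the ray (origin + t * direction, t >= 0) touch the box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes; it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


@dataclass
class GeometryProxy:
    box_min: Vec3
    box_max: Vec3
    loader: Callable[[], bytes]      # hypothetical: reads the blob from disk
    _data: Optional[bytes] = None    # nothing in memory until needed

    @property
    def loaded(self) -> bool:
        return self._data is not None

    def intersect(self, origin: Vec3, direction: Vec3) -> bool:
        if not ray_hits_box(origin, direction, self.box_min, self.box_max):
            return False             # ray missed the box: geometry never loaded
        if self._data is None:
            self._data = self.loader()
        return True                  # a real renderer would now test the triangles

    def unload(self) -> None:
        """Free the geometry again to make room for other data."""
        self._data = None


proxy = GeometryProxy((0, 0, 0), (1, 1, 1), loader=lambda: b"mesh bytes")
proxy.intersect((5.0, 5.0, -1.0), (0.0, 0.0, 1.0))
print("after a miss:", proxy.loaded)
proxy.intersect((0.5, 0.5, -1.0), (0.0, 0.0, 1.0))
print("after a hit:", proxy.loaded)
```

A scene full of proxies that are never hit by a ray therefore costs little more than their bounding boxes.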

One neat feature with the proxies is that they can be animated, i.e. mesh deformations can be stored (you can naturally just move the instances themselves around normally without having to save them as "animated" proxies, as a matter of fact, instance transformation is not baked into the file, only the deformations).

You can think of it as a point-cache on steroids, because the entire mesh is actually saved - which means that topology changing animation (such as, say, a fluid sim) can be baked to proxies. Naturally, it'll eat lots of disk ... but it's possible. The animation can be re-timed and ping-pong'ed (so you can make, say, swaying trees more easily).

Now, creating proxies in the shipping 3ds Max 2009 is a bit of a multi-step process. I wrote a little script to simplify that, but it wasn't ready in time to make it into the shipping 2009, so you can find it here:

Now you should be able to right-click an object, and get a "Convert Object(s) to mental ray Proxy" option.

This allows you to convert an object and replace the original with a mr Proxy. Note this removes your original and replaces it (and all its instances) with the proxy. It retains all transformation animation, children and parent links in all the instances. Now be aware your original is thrown away - so don't do this in some file where you do not have a saved copy of your carefully crafted object!!!

You can also select multiple objects for baking to proxies. This, however, works slightly differently. Instead of just a filename, you are asked for a file prefix, and the actual object name in the scene is then appended to that name.... so if your prefix is "bob", then "Sphere01" is saved as "bobSphere01.mib".
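The naming rule is plain string concatenation. A tiny sketch (in Python rather than MaxScript, with a made-up function name) of how the file names come out:

```python
def proxy_filename(prefix: str, object_name: str, extension: str = ".mib") -> str:
    """Name of the proxy file for one object: prefix + scene object name + extension."""
    return f"{prefix}{object_name}{extension}"


for name in ("Sphere01", "Box01"):
    print(proxy_filename("bob", name))
```

Note that nothing is inserted between the prefix and the object name, so end your prefix with something like an underscore if you want one.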

Now, this is an unsupported experimental tool. Be aware it will delete your original Object(s) - so save your original scene. It may have a gazillion of bugs, misfeatures, and may cause your computer to explode. There is no warranty that it'll even execute. But if you find it useful.... enjoy. ;)

Now the next question I invariably get is this: Can you instantiate proxies as particles? It doesn't seem to work.

The story is this. Back in the day, 3ds Max had issues with handling many objects. Many polygons were easier for it to handle. And even today, I guess a million single-polygon objects will be much slower than a single million-polygon object, due to the per-object overhead.

Many (if not all) of the 3ds Max tools, including particle systems, were written with this in mind. So, for example, instantiating an object into a particle system means that the mesh itself is copied. So when you have a box, and instantiate this into a particle system w. 1000 particles, this doesn't really make 1000 boxes. It actually makes a single mesh containing the faces copied off the 1000 boxes.

Since the mr Proxies aren't meshes (they have no geometry as seen from inside max, they are helpers), they can't be copied as meshes. It won't work!

Luckily, the planet is filled with Smart People. One of these Smart People is named Borislav "Bobo" Petrov, and if you've ever used a MaxScript, you've heard of him.

Naturally, Bobo has a solution. Check out this post on CGTalk, where Bobo posts a script which can bake any scene instance to a particle flow particle system.

The script creates real and true instances of the objects, rather than trying to "steal the mesh faces". And by virtue of doing true instances, it works perfectly with the mr Proxies.

I honestly have no idea what the 3ds Max community would even do without Bobo, he's such a phenomenal resource.

So, there you go. Have fun with 3ds Max 2009 and PFlow'in your proxies....

/Z

"What, already?" I hear you ask. Yeah - indeed. This was a shortened development cycle to realign the release date of 3ds Max with other Autodesk products. Disregarding the marketing talk, it means that stuff had to happen in six months that normally takes a year. And being heavily involved in this release, I can say that I am still sweating from the workload....

For mental ray nuts such as yourself, there's some fun new stuff in 2009. Perhaps the most notable is the hotly requested "mental ray proxies".

What is a "mental ray proxy", you ask?

mental ray proxies

Well, technically, it's a render-time demand-loaded piece of geometry. The particular implementation chosen for 3ds Max is in the form of a binary proxy. This means that the mental ray render geometry data is simply dumped to disk as a blob of bytes together with a bounding box. These bytes can then be read in... but not until a ray actually touches the bounding box!

Normally, geometry lives in the 3ds Max scene, and is then translated to mental ray data. This means the object effectively lives twice in memory: once in 3ds Max, and once as the mental ray "counterpart". Not only do the proxies remove the translation time, they actually remove the need for the object to exist in all its glory in 3ds Max; there is only a lightweight representation of the object in the scene, which can be displayed as a sparse point cloud so you can "sort of see what it is" and work the scene at interactive rates. Not until the object is actually needed for rendering is it even loaded into memory, and when it is no longer needed, it can be unloaded again to make room for other data.
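To make the "not until a ray touches the bounding box" idea concrete, here is a minimal Python sketch. It is not how mental ray implements it internally; the class name `LazyProxy`, the `loader` callback and the load counter are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class LazyProxy:
    """Hypothetical demand-loaded geometry: only a bounding box lives in memory."""
    bbox_min: Vec3
    bbox_max: Vec3
    loader: Callable[[], bytes]            # assumed: reads the geometry blob from disk
    _data: Optional[bytes] = field(default=None, repr=False)
    loads: int = 0                         # counts how often the blob was actually read

    def hit_bbox(self, origin: Vec3, direction: Vec3) -> bool:
        # Standard slab test of a ray against an axis-aligned box.
        t_near, t_far = float("-inf"), float("inf")
        for o, d, lo, hi in zip(origin, direction, self.bbox_min, self.bbox_max):
            if d == 0.0:
                if o < lo or o > hi:
                    return False           # parallel to this slab and outside it
                continue
            t0, t1 = (lo - o) / d, (hi - o) / d
            if t0 > t1:
                t0, t1 = t1, t0
            t_near, t_far = max(t_near, t0), min(t_far, t1)
        return t_near <= t_far and t_far >= 0.0

    def geometry_for_ray(self, origin: Vec3, direction: Vec3) -> Optional[bytes]:
        if not self.hit_bbox(origin, direction):
            return None                    # ray misses the box: nothing is loaded
        if self._data is None:
            self._data = self.loader()     # first hit: read the blob
            self.loads += 1
        return self._data

    def unload(self) -> None:
        self._data = None                  # free the memory for other data


proxy = LazyProxy((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), loader=lambda: b"mesh bytes")
print(proxy.geometry_for_ray((5.0, 5.0, -1.0), (0.0, 0.0, 1.0)))   # misses the box
print(proxy.geometry_for_ray((0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))   # hits, triggers the load
```

The point of the design is that a scene full of proxies that no ray ever touches costs nothing beyond their bounding boxes.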

One neat feature with the proxies is that they can be animated, i.e. mesh deformations can be stored (naturally, you can move the instances themselves around without saving them as "animated" proxies; as a matter of fact, instance transformation is not baked into the file, only the deformations).

You can think of it as a point-cache on steroids, because the entire mesh is actually saved - which means that topology-changing animation (such as, say, a fluid sim) can be baked to proxies. Naturally, it'll eat lots of disk ... but it's possible. The animation can be re-timed and ping-pong'ed (so you can make, say, swaying trees more easily).
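As a rough illustration of what re-timing and ping-pong playback mean for a baked sequence, here is a small Python sketch that maps a scene frame to a stored proxy frame. The function and its parameter names (`speed`, `offset`, `ping_pong`) are my own assumptions for the example, not the actual 3ds Max proxy parameters.

```python
def proxy_frame(scene_frame: int, num_frames: int, speed: float = 1.0,
                offset: int = 0, ping_pong: bool = False) -> int:
    """Map a scene frame to an index into a baked sequence of num_frames frames."""
    if num_frames <= 1:
        return 0
    t = int((scene_frame + offset) * speed)      # re-timing: scale and shift
    if ping_pong:
        period = 2 * (num_frames - 1)            # forward then backward
        t %= period
        return t if t < num_frames else period - t
    return t % num_frames                        # plain looping


print([proxy_frame(f, 4) for f in range(8)])                  # loops 0..3
print([proxy_frame(f, 4, ping_pong=True) for f in range(8)])  # sways back and forth
```

With ping-pong, a short bake of a tree swaying one way plays back as a continuous sway in both directions, which is why it saves disk for that kind of motion.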

Making proxies

Now, creating proxies in the shipping 3ds Max 2009 is a bit of a multi-step process. I wrote a little script to simplify that, but it wasn't ready in time to make it into the shipping 2009, so you can find it here:

- Download mental ray-mrProxyMake.mcr

- Launch max, and on the MaxScript menu choose "Run Script" and pick the file. By doing this, it should now have installed itself.

- Now open the "Customize" menu, then "Customize User Interface"

- Choose the "Quads" tab

- On the left, choose the category "mental ray"

- In the list that appears, you'll find a "Convert Object(s) to mental ray Proxy". Make sure you pick the one with the plural "s" in "Object(s)"; if you find one without the "s", it is the shipping one, which is not so fun. ;)

- Drag it to a quad menu of your choice - done!

Now you should be able to right-click an object, and get a "Convert Object(s) to mental ray Proxy" option.

This allows you to convert an object and replace the original with a mr Proxy. Note that this removes your original and replaces it (and all its instances) with the proxy. It retains all transformation animation, children and parent links in all the instances. Now be aware your original is thrown away - so don't do this on a file unless you have a saved copy of your carefully crafted object!!!

You can also select multiple objects for baking to proxies. This, however, works slightly differently. Instead of just a filename, you are asked for a file prefix, and the actual object name in the scene is then appended to that name.... so if your prefix is "bob", then "Sphere01" is saved as "bobSphere01.mib".
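The naming rule is simple enough to write down. This little Python sketch only mirrors the rule as described above (prefix, then object name, then ".mib"); it is not the script itself, and `proxy_filenames` is a made-up helper name.

```python
def proxy_filenames(prefix, object_names, extension=".mib"):
    """Return {object name: proxy file name} using prefix + name + extension."""
    return {name: f"{prefix}{name}{extension}" for name in object_names}


print(proxy_filenames("bob", ["Sphere01", "Box02"]))
```

Since the object name is the only thing that distinguishes the files, two selected objects with the same name would map to the same file name under this rule, so it is worth giving objects unique names before baking.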

Disclaimer

Now, this is an unsupported experimental tool. Be aware it will delete your original Object(s) - so save your original scene. It may have a gazillion of bugs, misfeatures, and may cause your computer to explode. There is no warranty that it'll even execute. But if you find it useful.... enjoy. ;)

What about particles? Pflow?

Now the next question I invariably get is this: Can you instantiate proxies as particles? It doesn't seem to work?

The story is this. Back in the day, 3ds Max had issues with handling many objects. Many polygons were easier for it to handle. And even today, I guess a million single-polygon objects will be much slower than a single million-polygon object, due to the per-object overhead.

Many (if not all) of the 3ds Max tools, including particle systems, were written with this in mind. So, for example, instantiating an object into a particle system means that the mesh itself is copied. So when you have a box, and instantiate this into a particle system with 1000 particles, this doesn't really make 1000 boxes. It actually makes a single mesh containing the faces copied off the 1000 boxes.

Since the mr Proxies aren't meshes (they have no geometry as seen from inside max, they are helpers), they can't be copied as meshes. It won't work!

Luckily, the planet is filled with Smart People. One of these Smart People is named Borislav "Bobo" Petrov, and if you've ever used a MaxScript, you've heard of him.

Naturally, Bobo has a solution. Check out this post on CGTalk, where Bobo posts a script which can bake any scene instance to a particle flow particle system.

The script creates real and true instances of the objects, rather than trying to "steal the mesh faces". And by virtue of doing true instances, it works perfectly with the mr Proxies.
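The difference between "stealing the mesh faces" and true instancing can be sketched in a few lines of Python. This is a toy model with invented class names (`Mesh`, `ProxyHelper`), not the particle system's or Bobo's actual code; it only shows why a helper without faces breaks the first approach and not the second.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Mesh:
    faces: List[Tuple[int, int, int]]


@dataclass
class ProxyHelper:
    path: str          # a helper: it points at a file and has no faces of its own


def copy_faces_per_particle(source, particle_count: int) -> Mesh:
    """Face-copy style: build one big mesh out of the source's faces."""
    if not hasattr(source, "faces"):
        raise TypeError("source has no mesh faces to copy")
    return Mesh(faces=list(source.faces) * particle_count)


def instance_per_particle(source, particle_count: int) -> list:
    """True instancing: every particle just references the same object."""
    return [source for _ in range(particle_count)]


box = Mesh(faces=[(0, 1, 2)] * 12)
print(len(copy_faces_per_particle(box, 1000).faces))   # one mesh, 12000 faces

proxy = ProxyHelper("bobSphere01.mib")
print(len(instance_per_particle(proxy, 1000)))         # 1000 references, no faces needed
```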

I honestly have no idea what the 3ds Max community would even do without Bobo, he's such a phenomenal resource.

So, there you go. Have fun with 3ds Max 2009 and PFlow'in your proxies....

/Z

Labels: large scenes, Max, memory, mental ray, particle systems, PFlow, proxies

2008-02-19

Why does mental ray Render (my background) Black? Understanding the new Physical Scale settings

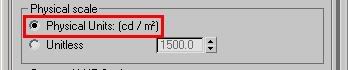

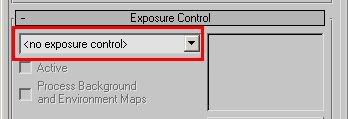

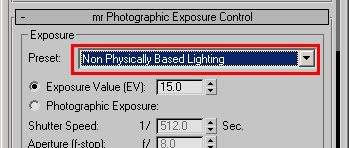

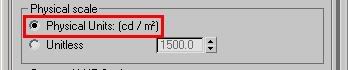

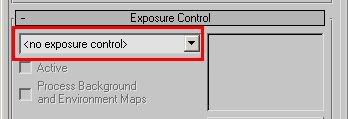

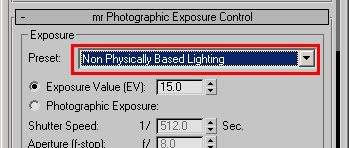

The most common "complaints" I get about mental ray rendering in 3ds Max 2008 are "it's washed out" and "it renders black". The answer to the former is "you are not handling your gamma correctly", and will be thoroughly covered in the future. The answer to the latter is "you are not understanding the Physical Scale settings". Which is what we're going to talk about now.

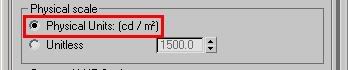

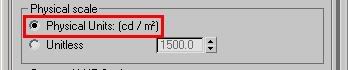

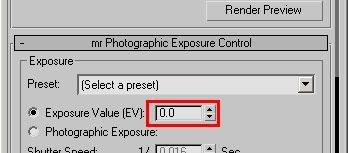

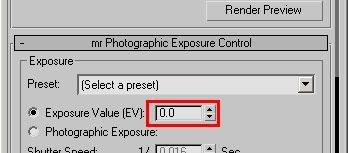

Color and Color

Without going too deep into the physics of photometric units (we did that already), we must understand that a normal color, an RGB value, i.e. a pixel color of some sort, can fundamentally represent one of two different things:

- A reflectance value

- A luminance (radiance) measurement

The "luminance" of something is simply how bright it looks. It's probably the most intuitively understandable lighting unit. Basically, you can say that when you are looking at the pixels your digital camera spits out on your computer, you are looking at pixels as individual luminance measurements, converted back to luminance variations by your screen.

I.e. a given luminance of some real world object flows in through the lens of your camera, hits the imaging surface (generally a CCD of some kind) and gets converted to some value. This value (for the moment ignoring things like transfer curves, gamma, spectral responses and whatnot) is, in principle, proportional to the luminance of the pixel.

Now "reflectance" is how much something reflects. It is often also treated in computer graphics as a "color". Your "diffuse color" is really a "diffuse reflectance". It's not really a pixel value (i.e. a "luminance") until you throw some light at it to reflect.

And herein lies the problem: traditionally, computer graphics has been very lax, treating luminance and reflectance as interchangeable properties, and it's gotten away with this because it has ignored "exposure", and instead played with light intensity. You can tell you are working with "oldschool" rendering if your lights have intensities like "1.0" or "0.2" or something like that, in some vague, unit-less property.

So when working "oldschool" style, if you took an image (some photo of a house, for example) and applied it as a "background", this image was just piped through as is: black was black, white was white, and the pixels came through as before. Piece of cake.

Also, a light of intensity "1.0" shining perpendicularly onto a diffuse texture map (some photo of wood, say) would yield exactly those pixels back in the final render.

Basically, the wood "photo" was treated as "reflectance", which was then lit with something arbitrary ("1.0") and treated on screen as luminance. The house photo, however, was treated as luminance values directly.

But here's the problem: Reflectance is inherently limited in range from 0.0 to 1.0... i.e. a surface can never reflect more than 100% of the light it receives (ignoring fluorescence, bioluminescence, or other self-emissive properties), whereas actual real-world luminance knows no such bounds.

Now consider a photograph... a real, hardcopy, printed photograph. It actually reflects the light you shine on it. You can't watch a photograph in the dark. So what a camera does is to take values that are luminances and take them through some form of exposure (using different electronic gains, chemical responses, irises to block light, and modulating the amount of time the imaging surface is subjected to the available luminances). Basically, what the camera does is to take a large range (0-to-infinity, pretty much) and convert it down to a low range (0-to-1, pretty much).

When this is printed as a hardcopy, it indeed is basically converting luminances, via exposure, into a reflectance (of the hardcopy paper). But this is just a representation. How bright (actual luminance) the "white" part of the photographic hardcopy will be depends on how much light you shine on the photograph when you view it - just like how bright (actual luminance) the "white" part of the softcopy viewed on the computer is depends on the brightness knob of the monitor, the monitor's available emissive power, etc.

The funny thing with the human visual system is that it is really adaptive, and can view this image and fully understand and decode the picture even though the absolute luminance of the "white" part of the image varies like that. Our eyes are really adaptive things. Spiffy, cool things.

So, the flow of data is:

- Actual luminance in the scene (world)

- going through lens/film/imaging surface and is "exposed" (by the various camera attributes) and

- becomes a low dynamic range representation on the screen, going from 0-to-1 (i.e. from 0% to 100% at whatever max brightness the monitor may have for the day).

So, now the problem.

In the oldschool world we could draw a near equivalence between the "reflectance" interpretation and the "luminance" interpretation of a color. Our "photo of wood" texture looked the same as "photo of house" background.

What if we use a physical interpretation?

What about our "photo of wood"? Well, if we ignore the detail of the absolute reflectance not necessarily being correct due to us not knowing the exposure or lighting when the "photo of wood" was taken, it is still a rather useful way to represent wood reflectance.

So, if we shine some 1000's of lux of light onto a completely white surface, and expose the camera such that this turns into a perfectly white (without over-exposure) pixel on the screen, then applying this "photo of wood" as a texture map will yield exactly the same result as the "oldschool" method. Basically, our camera exposure simply counter-acted the absolute luminance introduced by the light intensity.

So, in short, the "photo of wood" used interpreted as "reflectance" still works fine (well, no less "fine" than before).
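Here is the same argument as a few lines of Python. It assumes an ideal diffuse (Lambertian) surface, for which luminance is reflectance times illuminance divided by pi, and it models exposure as a single linear gain with no gamma or tone curve; both are simplifications of mine, and the function names are made up.

```python
import math


def diffuse_luminance(reflectance: float, illuminance_lux: float) -> float:
    """Luminance (cd/m^2) of an ideal diffuse surface lit with the given lux."""
    return reflectance * illuminance_lux / math.pi


def exposure_gain_for_white(illuminance_lux: float) -> float:
    """Linear gain that maps a perfectly white surface to pixel value 1.0."""
    return 1.0 / diffuse_luminance(1.0, illuminance_lux)


wood_texel = 0.35                       # a "photo of wood" value read as reflectance
for lux in (1.0, 5000.0):
    gain = exposure_gain_for_white(lux)
    pixel = diffuse_luminance(wood_texel, lux) * gain
    print(lux, round(pixel, 6))         # the light level cancels out
```

Whatever light level you pick, the exposure that makes white come out white also makes the wood texel come out as its original value, which is exactly the "oldschool" result.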

What about us placing our "photo of house" as the background?

Well, now things start to change... we threw some thousands of lux onto the photo, creating a luminance likely in the thousands as well.... these values "in the thousands" were then converted to a range from black-to-white by the exposure.

So what happens with our "photo of house"? Nothing has told it to be anything other than a 0-to-1 range thing. Basically, if interpreted strictly as luminance values, the exposure we are using (the one converting something in the thousands to white) will convert something in the range of 0-1 to.... pitch black. It won't even be enough to wiggle the lowest bit on your graphics card.

You will see nothing. Nada. Zip. BLACKNESS!

So, assuming you interpret oldschool "0-to-1" values directly as luminance, you will (for most common exposures) not see anything.
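To put a number on "won't wiggle the lowest bit", here is a small Python sketch. It assumes a purely linear exposure (no gamma, no tone curve) and a made-up white point of 1500 cd/m^2 standing in for "something in the thousands"; real exposure controls are more elaborate than this.

```python
def to_8bit(linear_pixel: float) -> int:
    """Quantize a linear 0-to-1 pixel value to an 8-bit code, with clamping."""
    clamped = max(0.0, min(1.0, linear_pixel))
    return round(clamped * 255)


white_luminance = 1500.0                 # assumed: the luminance the exposure maps to white
gain = 1.0 / white_luminance

print(to_8bit(white_luminance * gain))   # the lit white surface
print(to_8bit(1.0 * gain))               # the brightest pixel of a 0-to-1 background photo
print(to_8bit(3.0 * gain))               # roughly where the lowest bit starts to move
```

Under these assumptions the brightest pixel of an unscaled background photo lands at about 0.17 of one 8-bit step, so it rounds to zero.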

Now this is the default behaviour of the mr Photographic Exposure control!!

This setting (pictured above) from the mr Photographic Exposure will interpret any "oldschool" value directly as a cd/m^2 measurement. And will, with most useful exposures used for most scenes, result in the value "1" representing anything from complete blackness to near-imperceptible-near-blackness.

(I admit, I've considered many a time that making this mode the default was perhaps not the best choice)

So how do we fix it?

Simple. There are two routes to take:

- Simply "know what you are doing" and take into account that you need to apply the "luminance" interpretation at certain points, and scale the values accordingly.

- Play with the "Physical Scale" setting

Both of these methods equate to roughly the same result, but the former is a bit more "controlled". I.e. for a "background photo" you will need to boost its intensity to be in the range of the "thousands" that may be necessary to show up for your current exposure. In the Output rollout, turn up "RGB Level" very high.

Of course, in an ideal world you would have your background photo taken at a known exposure, or where you had measured the luminance of some known surface in the photo, and you would set the scaling accordingly. Or even better, your background image is already a HDR image calibrated directly in cd/m^2.
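If you do happen to know the luminance of some surface in the photo, the scale factor is a one-line calculation. The sketch below assumes the pixel value is linear (gamma already removed) and that the scale is applied as a plain multiplier on the map's RGB output; the function name and the numbers are only an example.

```python
def background_scale(known_luminance_cd_m2: float, pixel_value: float) -> float:
    """Multiplier that makes a linear 0-to-1 pixel value read as cd/m^2."""
    if pixel_value <= 0.0:
        raise ValueError("need a non-black reference pixel")
    return known_luminance_cd_m2 / pixel_value


# Example: a wall measured at 3000 cd/m^2 shows up as 0.75 in the photo.
print(background_scale(3000.0, 0.75))
```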

But what of the other method?

Understanding the 'Physical Scale' in 3ds Max

When you are using the mr Photographic Exposure control, and you switch to the "Unitless" mode...

...one can say that this parameter now is a "scale factor" between any "oldschool" value and physical values. So you can think of it as if any non-photometric value (say, pixel values of a background image, or light intensities of non-photometric lights) were roughly multiplied by this value before being interpreted as physical values. (This, however, is a simplification; under the hood it actually does the opposite, it is the physical values that are scaled in relation to the "oldschool" values, but since the exposure control exactly "undoes" this in the opposite direction, the apparent practical result of this is that it seems to "scale" any oldschool-0-1 values)

When to use which mode

Which method to use is up to you. You may need to know a few things, though: The "cd/m^2" mode is a bit more controllable and exact. However, the "Unitless" mode is how the other Exposure controls, such as the Logarithmic, work by default. Hence, for a scene created with a logarithmic exposure, to get the same lighting balance between photometric things and "oldschool" things, you must use the unitless mode.

Furthermore, certain features of 3ds Max actually work incorrectly, limiting their output to a "0-1" range. Notable here are many of the volumetric effects like fog. Hence, if the "cd/m^2" mode is used, fog intensity maxes out at "1 cd/m^2". This causes things like fog or volume light cones to appear black! That is bad.

Whereas in the "Unitless" mode, the value of "Physical Scale" basically defines how bright the "1.0" color "appears", and this gets around any limitation of the (unfortunately) clamping volume shaders.
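The two modes, and why clamped fog survives only in one of them, can be sketched like this in Python. It follows the simplified "multiply oldschool values by Physical Scale" reading given above rather than what happens under the hood, it uses a linear exposure gain, and all names and numbers are assumptions for the example.

```python
def displayed_pixel(value: float, mode: str, physical_scale: float,
                    exposure_gain: float, clamped: bool = False) -> float:
    """Simplified model of how an oldschool value ends up on screen."""
    if clamped:
        value = min(value, 1.0)               # e.g. a volume shader limited to 0-1
    if mode == "unitless":
        luminance = value * physical_scale    # 1.0 is scaled up to physical_scale
    elif mode == "cd/m2":
        luminance = value                     # 1.0 is taken literally as 1 cd/m^2
    else:
        raise ValueError("mode must be 'unitless' or 'cd/m2'")
    return min(1.0, luminance * exposure_gain)


gain = 1.0 / 1500.0                           # exposure mapping 1500 cd/m^2 to white
print(displayed_pixel(5.0, "cd/m2", 1500.0, gain, clamped=True))     # fog: near black
print(displayed_pixel(5.0, "unitless", 1500.0, gain, clamped=True))  # fog: visible
```

In the model, a clamped fog value can never exceed 1 cd/m^2 in "cd/m^2" mode no matter how high you set it, while in "Unitless" mode the Physical Scale lifts it back into the range the exposure expects.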

BTW: I actually already covered this topic in an earlier post, but it seems that I needed to go into a bit more detail, since the question still arises quite often on various fora.

Hope this helps...

/Z

Labels: Exposure, Max, Maya, mental ray, photometry, physical sky

2007-11-05

More Hidden Gems: mia_envblur - glossy reflections of Environments

I thought initially I would title this post "Happiness is a Warm Gun", since it could just as well have been the "black gun metal" tip, but I felt a more "searchable" name would be more useful...

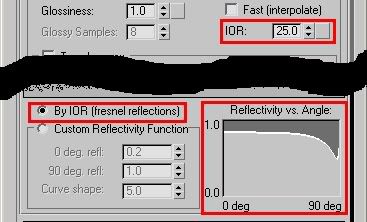

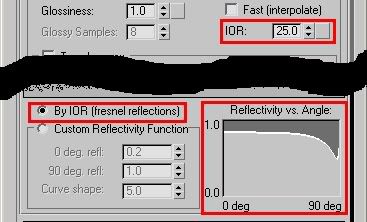

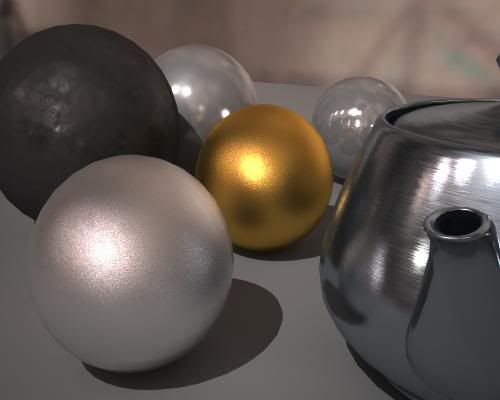

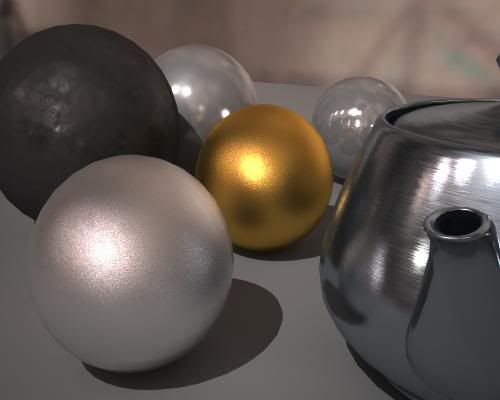

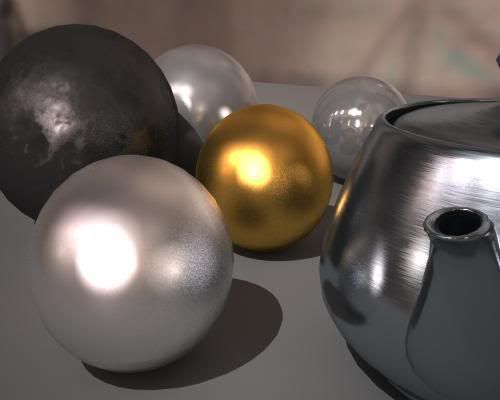

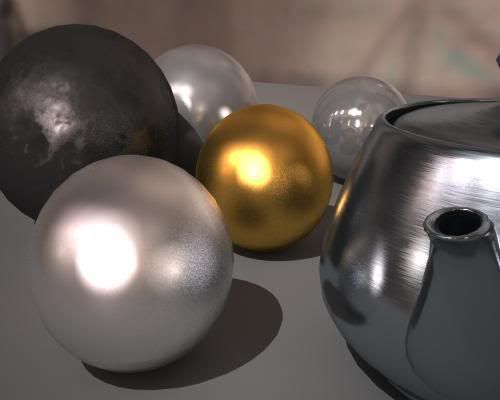

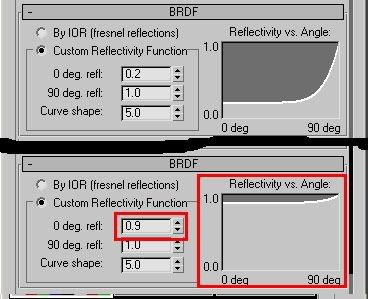

As for the actual blur amount, you have two choices. Either you set a fixed amount of blur with the mia_envblur parameter Blur. But that is a bit boring... if you have different materials of different glossiness, you would need separate copies with different blur amounts applied as environment maps to each material... very tedious...!

Much better is to turn the mia_material_blur parameter on!

This will drive the amount of blur from the glossiness setting of the mia_material. This works such that mia_material "informs" the environment shader which glossiness is used for a certain environment lookup, and hence allows a single global copy of mia_envblur (applied in the global (camera) Environment slot) to follow the glossiness of multiple mia_materials, or even map-driven glossiness on a single material:

Very Visible Variable Varnishness

I can't stress enough how varying the glossiness with a map gives a nice feel of wear, tear and "fingerprintiness" to an object. And with mia_envblur you can do this without shame and without fearing horrid render times.

Yes, mia_envblur works great together with the production shaders. Just "wrap" it around your mip_mirrorball shader, to do glossy lookups into it.

Perfect Production Partner

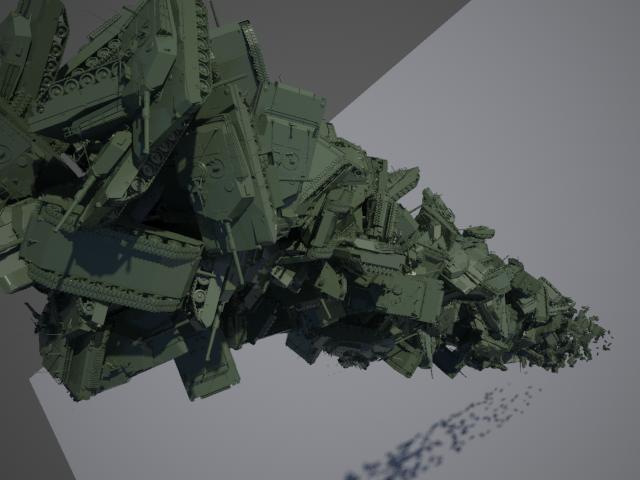

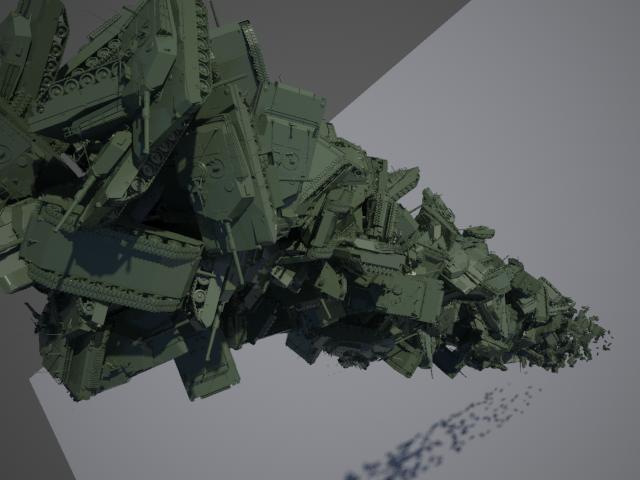

This spaceship (from TurboSquid) is mapped with a glossy reflection that is heavily varied (A whole "make a spaceship in the backyard" tutorial will probably appear later, if I find time).

Yes it does. Just a word of warning: be certain that the resolution parameter is set high enough to resolve all details in the environment you use. The "Galileo" probe I use here has too many small lights in it for the default of "200" to be enough (this means the map is rasterized into a 200x200 pixel mipmap). But at 600 it works great. Also note that any non-glossy lookups into the environment map actually bypass mia_envblur automatically, so these are looked up in the full resolution map!

See for yourself in this DivX test animation, which includes the above spaceship hovering over my backyard, the above gun-metal-black battle robot (also from TurboSquid) and some spheres, including my attempt to do a "gnarly cannon ball".

For the record, these renders also use the photographic exposure and the Lume "Glare" shader. The animations rendered at about 1 minute per frame (on an HP x6400 Quad Core).
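For a feel of what the resolution number buys you, here is a tiny Python calculation. The texel counts follow directly from the NxN rasterization mentioned above; the degrees-per-texel figure additionally assumes the map's width spans a full 360 degrees, which is my own assumption and may not match how mia_envblur lays out its internal map.

```python
def texel_stats(resolution: int, span_degrees: float = 360.0):
    """Return (texel count, degrees covered per texel) for an NxN map."""
    texels = resolution * resolution
    degrees_per_texel = span_degrees / resolution   # assumes width spans span_degrees
    return texels, degrees_per_texel


for res in (200, 600):
    print(res, texel_stats(res))
```

Going from 200 to 600 costs nine times the texels, but each texel covers a third of the angle, which is what lets small bright lights stay distinct instead of smearing together.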

Hope this helps your glossy-thing-renders!

/Z

Formulating The Problem

Glossy reflections in computer graphics are always a headache, since they invariably involve multiple samples, which invariably involves grain. Interpolation helps, but interpolation doesn't really work well for complex geometries, and can be "detectable" in animations (i.e. you can often "see" that something fishy is going on with the reflections, so I advise against using interpolated reflections in animations).

But, you say, I need to have my semi-glossy space robot/gunship/whatever and I need to animate it, and I have this really contrasty HDR environment it must reflect... I would need to turn up samples to crazy levels... the animation would never be finished! What can I do!?

The solution: mia_envblur

This shader is another "sort of hidden" gem (depending on which application you are running; it's hidden away in 3ds Max but not in Maya, and its existence isn't really marketed heavily... ;) )

What does it do?

Well, the documentation explains this fairly clearly, but in short:

If you have some objects in a scene which largely reflect an environment map (this bit is important; mia_envblur really doesn't do anything useful for, say, an architectural interior where objects reflect other objects, it only does its job for environment reflections) and your environment is high-contrast, you tend to get grain as a result. Witness here some spheres n' stuff reflecting the good old "Galileo's Tomb" probe from Paul Debevec:

Gruesomely Grainy

This is clearly not satisfactory: the grain is very heavy, and the render is slow due to a lot of oversampling happening. We can turn up the sampling in the shaders and get something like this:

Borderline Better

This looks nicer indeed, but the render time was nearly ten times that of the above! Not something you'd want to do for an extended animation. And it would still glitter and creep around. Not good.

So... enter mia_envblur. The shader is used to "wrap around" some existing environment shader, i.e. it takes some other environment shader, rasterizes it into an internal mipmap, and can perform intelligent filtered lookups in a special coordinate space which behaves very close to doing actual glossy lookups to that environment with a near-infinite amount of rays!

Soothingly Smooth!

This is much nicer looking, and the environment reflections are smooth (but object-to-object reflections still involve multi-sampling and can have some grain. A trick around that is to limit the reflection distance as mentioned in the manual).
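To see why a filtered lookup beats throwing more rays at the problem, here is a toy sketch in plain Python. It is not how mia_envblur is implemented (its internals and its "special coordinate space" are not described here); the 1D environment, the window width and the function names are all made up for illustration. It only contrasts averaging a handful of random lookups with averaging every texel in the same window.

```python
import random
import statistics

# Toy 1D "environment": mostly dark, with a few very bright texels
# standing in for the small lights of a high-contrast HDR probe.
SIZE = 512
env = [0.05] * SIZE
for i in (40, 200, 333, 470):
    env[i] = 500.0


def glossy_lookup_sampled(center, width, samples, rng):
    """Estimate a blurred reflection by averaging a few random lookups."""
    total = 0.0
    for _ in range(samples):
        offset = rng.randrange(-width, width + 1)
        total += env[(center + offset) % SIZE]
    return total / samples


def glossy_lookup_prefiltered(center, width):
    """Average every texel in the same window: one noise-free answer."""
    window = [env[(center + o) % SIZE] for o in range(-width, width + 1)]
    return sum(window) / len(window)


rng = random.Random(1)
center, width = 210, 32

exact = glossy_lookup_prefiltered(center, width)
estimates = [glossy_lookup_sampled(center, width, 8, rng) for _ in range(200)]
spread = statistics.pstdev(estimates)

print(f"filtered lookup:    {exact:.3f}")
print(f"8-sample estimates: mean {statistics.mean(estimates):.3f}, spread {spread:.3f}")
```

The sampled estimates jump around depending on whether a ray happens to hit the bright texel, and that jumping is the grain. The filtered lookup gives the same value every time, which is what you want when the frame has to match its neighbours in an animation.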

To use mia_envblur, you must follow these steps:

- Where your existing environment shader is connected, instead connect mia_envblur, and connect your original environment into its environment input.

- In your mia_material, turn on its "single_env_sample" parameter. This is very important, or you will gain neither speed nor quality (you will get a little bit of smoothing, but not supersmoothing of the reflections).

- Set the "resolution" high enough to resolve the details of your original environment.

As for the actual blur amount, you have two choices. Either you set a fixed amount of blur with the mia_envblur parameter Blur. But that is a bit boring... if you have different materials of different glossiness, you would need separate copies with different blur amounts applied as environment maps to each material... very tedious...!

Much better is to turn the mia_material_blur parameter on!

This will drive the amount of blur from the glossiness setting of the mia_material. It works in such a way that mia_material "informs" the environment shader which glossiness is used for a certain environment lookup, which allows a single global copy of mia_envblur (applied in the global (camera) Environment slot) to follow the glossiness of multiple mia_materials, or even map-driven glossiness on a single material:

Very Visible Variable Varnishness

I can't stress enough how varying the glossiness with a map gives a nice feel of wear, tear and "fingerprintiness" to an object. And with mia_envblur you can do this without being ashamed or fearing horrid render times.

Production Shaders?

Yes, mia_envblur works great together with the production shaders. Just "wrap" it around your mip_mirrorball shader, to do glossy lookups into it.

Perfect Production Partner

This spaceship (from TurboSquid) is mapped with a glossy reflection that is heavily varied (A whole "make a spaceship in the backyard" tutorial will probably appear later, if I find time).

Does it move?

Yes it does. Just a word of warning: be certain that the resolution parameter is set high enough to resolve all details in the environment you use. The "Galileo" probe I use here has too many small lights in it for the default of "200" to be enough (this means the map is rasterized into a 200x200 pixel mipmap). But at 600 it works great. Also note that any non-glossy lookups into the environment map actually bypass mia_envblur automatically, so these are looked up in the full resolution map!

See for yourself in this DivX test animation, which includes the above spaceship hovering over my backyard, the above gun-metal-black battle robot (also from TurboSquid) and some spheres, including my attempt to do a "gnarly cannon ball".

For the record, these renders also use the photographic exposure and Lume "Glare" shader. The animations rendered at about 1 minute per frame (on an HP x6400 Quad Core).
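For a rough feel of what the resolution number buys you, here is a back-of-the-envelope sketch. It assumes (purely for illustration) that the rasterized map spreads a full 360 degrees evenly across its width; the real internal layout of mia_envblur may well differ, and degrees_per_texel is a made-up helper name.

```python
def degrees_per_texel(resolution, span_degrees=360.0):
    """Angular size of one texel if the map covers span_degrees evenly."""
    return span_degrees / resolution


for resolution in (200, 600):
    size = degrees_per_texel(resolution)
    print(f"resolution {resolution}: about {size:.2f} degrees per texel")
```

Under that assumption, any light in the probe that is much smaller than one texel gets smeared into its neighbours before any blurring even starts, which is why a probe full of small lights wants a higher setting.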

Hope this helps your glossy-thing-renders!

/Z

2007-10-30

Production Shader Examples

So, who wants to know more about the production shaders? Raise of hands? (See the introductory post, if you missed that.)

OK, I don't have time for an extravagant essay right now, but what I did do is to put a set of examples online.

The examples use some geometry (in some cases, our friend "Robo" pictured here on the right) and show how to use it together with the production shaders, both to introduce our geometry into various backgrounds and to use features like the motion blur and motion vector code.

General Overview

The Production library does a lot of things, but one of its specialties is to help us integrate a CG object into a photographic background, with the help of a photo of the background and a photo of a mirror ball taken at the same location and at the same camera angle as the background photo. So, to play with that, we need a set of backgrounds, with matching mirror ball photos.

As luck would have it, I happen to have just that. (Amazing, innit?) ;)

These backgrounds are available in this backgrounds.zip file. Please download that and unzip it before downloading any of the demo scenes (I also apologize for not having time to put Robo into any of the Maya scenes, but he was out at a party the day I made those files and didn't come home until late....)

In a hurry?

If you don't want to read, but play play play, you can go directly to this directory and you will find the files.

Examples for 3ds Max 2008

The 3ds Max demo scenes are sort of "all in one" demos, demonstrating a scene using the mip_matteshadow, mip_rayswitch_environment, mip_cameramap and mip_mirrorball to put a CG object into a real background as described in the PDF docs.

The file robot-1.max puts Robo in my back yard, robot-2.max puts him on my dining room table, robot-3.max on a window ledge, robot-4.max out in a gravel pit (what an absolutely charming place to hang out) and finally robot-5.max on my dining room table but at night, and some alien globules have landed...

They all work pretty much the same, i.e. the same settings only swapping in different backgrounds and mirror ball photos from the backgrounds.zip file.

The exception is the file robot-4-alpha.max which demonstrates how to do the same as robot-4.max does, but set up for external compositing (see more details below in the Maya section).

Examples for Maya 2008

The examples for Maya are more "single task" examples, and demonstrate one thing at a time.

mip_matteshadow1.ma and mip_matteshadow2.ma both demonstrate how to put a set of CG objects into a real background, using the exact same techniques as for 3ds Max above:

The file mip_matteshadow2b.ma demonstrates the same scene as mip_matteshadow2 but set up for external compositing (what is called "Best of Both Worlds" in the manual).

To recap from the manual briefly: In the normal mode (when you composite directly in the rendering and get a final picture including the background right out of the renderer), you use mip_cameramap in the background slot of your mip_matteshadow material, and in your global environment (in the Camera in Maya, in the "Environment" dialog in 3ds Max) you put in a mip_rayswitch_environment, which is fed the same mip_cameramap into its background slot, but a mip_mirrorball into its environment slot.

To do the "Best of Both Worlds" mode (to get proper alpha for external compositing, and not see the background photo in the rendering, but still see its effects: its bounce light, its lighting, its reflections, etc.), one needs to make a couple of changes to the above setup:

- In the global Environment there should still be a mip_rayswitch_environment as before. The only difference is that instead of putting mip_cameramap into its background slot, you put in transparent black (0 0 0 0).

The trick in Maya is that you cannot put an alpha value into a color slot. We can cheat this by using the mib_color_alpha from the base shaders, and setting its multiplier to 0.0.

- In the background slot of your mip_matteshadow you used to have a mip_cameramap with your background. What you do instead is to put in another mip_rayswitch_environment, and into its environment slot (important, yes, the environment and NOT the background!) you put back the mip_cameramap with your background photo, and in its background slot you again put transparent black (using the same trick as above).
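If the wiring is hard to keep straight, this little Python toy may help. It is not Maya or mental ray code; it just models a ray switch as "eye rays get the background slot, every other ray gets the environment slot", which is a simplification (how the real shader routes refraction and transparency rays is not modelled here). The names rayswitch and what_is_seen and the placeholder strings are invented for the sketch.

```python
PHOTO = "mip_cameramap (background photo)"
MIRRORBALL = "mip_mirrorball (mirror ball photo)"
TRANSPARENT_BLACK = (0.0, 0.0, 0.0, 0.0)


def rayswitch(ray_type, background, environment):
    """Toy ray switch: eye rays see 'background', all others 'environment'."""
    return background if ray_type == "eye" else environment


def what_is_seen(mode, ray_type):
    """Return (global environment result, matte shadow background result)."""
    if mode == "normal":
        global_env = rayswitch(ray_type, background=PHOTO, environment=MIRRORBALL)
        matte_background = PHOTO
    else:  # "best_of_both"
        global_env = rayswitch(ray_type, background=TRANSPARENT_BLACK,
                               environment=MIRRORBALL)
        matte_background = rayswitch(ray_type, background=TRANSPARENT_BLACK,
                                     environment=PHOTO)
    return global_env, matte_background


for mode in ("normal", "best_of_both"):
    for ray_type in ("eye", "reflection"):
        print(mode, ray_type, what_is_seen(mode, ray_type))
```

In the second mode the camera never sees the photo (only transparent black), while anything reached by a reflection still finds the photo and the mirror ball, which is the whole point of the setup.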

Having done this (as is already set up in the mip_matteshadow2b.ma example) you will get this rendering:

This image contains all the reflections of the forest, the bounce light from the forest, the reflection of the environment from the mirror ball... but doesn't actually contain the background itself.

However, it has an alpha channel that looks like this...

...which, as you see, contains all the shadows. So compositing this image directly on top of the background in a compositing application will give you the same result as the full image above, except with greater control of color balance etc. in post.
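For the curious, the "on top of" step in the compositing application boils down to the classic "over" operation. The sketch below assumes the rendering has premultiplied alpha (check your own output settings; this is an assumption, as are the function name and the pixel values), and works on single pixels to keep it short.

```python
def over(fg_rgba, bg_rgb):
    """Composite one premultiplied RGBA pixel over an opaque RGB pixel."""
    r, g, b, a = fg_rgba
    return tuple(c + (1.0 - a) * bg for c, bg in zip((r, g, b), bg_rgb))


background = (0.8, 0.6, 0.4)        # a pixel from the background photo

empty = (0.0, 0.0, 0.0, 0.0)        # nothing rendered: photo shows through
shadow = (0.0, 0.0, 0.0, 0.5)       # black with partial alpha: darkens the photo
robot = (0.2, 0.2, 0.2, 1.0)        # opaque CG pixel: replaces the photo

for name, pixel in (("empty", empty), ("shadow", shadow), ("robot", robot)):
    print(name, over(pixel, background))
```

This is why shadows living in the alpha channel are enough: a black pixel with partial alpha simply scales the photo down underneath it.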

Maya in Motion

There are three further example files for Maya:

mip_motionblur.ma, which demonstrates the motion blur, and mip_motionvector.ma and mip_motionvector2.ma, which both demonstrate how to output motion vectors.

I know these are rudimentary examples, but the day only has 48 hours... ;)